
Magnitudes

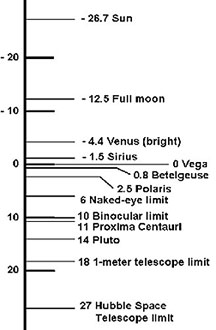

Magnitudes of Selected Objects


The method we use today to compare the apparent brightness (magnitude) of stars began with Hipparchus, a Greek astronomer who lived in the second century B.C. Hipparchus called the brightest star in each constellation "first magnitude." Ptolemy, in 140 A.D., refined Hipparchus' system and used a scale of 1 to 6 to compare star brightness, with 1 being the brightest and 6 the faintest. This is similar to the way tennis players are ranked: first rank is better than second, and so on. Unfortunately, Ptolemy did not use the brightest star in the night sky, Sirius, to set the scale, so Sirius has a negative magnitude of about -1.5. (Imagine being ranked -1.5 in the tennis rankings!)

Astronomers in the mid-1800s quantified these numbers and modified the old Greek system. Measurements demonstrated that 1st magnitude stars were about 100 times brighter than 6th magnitude stars. It has also been calculated that the human eye perceives a one-magnitude change as being about 2.5 times brighter, so a change of 5 magnitudes would seem to be 2.5 to the fifth power (approximately 100) times brighter. Therefore a difference of 5 magnitudes has been defined as being equal to a factor of exactly 100 in apparent brightness, which makes a difference of one magnitude a factor of the fifth root of 100, or about 2.512.
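The relationship between magnitude difference and brightness can be sketched in a few lines of Python. This is a minimal illustration that assumes only the definition above (5 magnitudes equals a factor of exactly 100). The function names `brightness_ratio` and `magnitude_difference` are made up for this example, and the value of -1.5 for Sirius is the rounded figure used in the text.

```python
import math


def brightness_ratio(m_faint: float, m_bright: float) -> float:
    """How many times brighter the m_bright object appears than the m_faint one.

    Uses the definition that 5 magnitudes is a factor of exactly 100,
    so each magnitude is a factor of 100 ** (1 / 5).
    """
    return 100 ** ((m_faint - m_bright) / 5)


def magnitude_difference(ratio: float) -> float:
    """Inverse relation: magnitude difference for a given brightness ratio."""
    return 2.5 * math.log10(ratio)


if __name__ == "__main__":
    # One magnitude step (for example, 2nd versus 1st magnitude).
    print(f"1 magnitude:   {brightness_ratio(2, 1):.3f} times brighter")

    # Five magnitude steps (6th versus 1st magnitude).
    print(f"5 magnitudes:  {brightness_ratio(6, 1):.1f} times brighter")

    # Sirius (about -1.5, rounded) versus a 6th magnitude star.
    print(f"Sirius vs 6th: {brightness_ratio(6, -1.5):.0f} times brighter")

    # Going the other way: a factor of 100 in brightness.
    print(f"Factor of 100: {magnitude_difference(100):.1f} magnitudes")
```

Note that the brighter object has the smaller magnitude, so the fainter magnitude is passed first to keep the ratio greater than 1.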
